Retrieval Augmented Generation: The Smart Edge Powering Trusted AI


Artificial intelligence has moved past the point where generating human-like language is impressive on its own. Enterprises are now focused on a harder problem: whether AI systems can deliver answers that are accurate, current, explainable, and safe to rely on. This shift has forced organizations to re-evaluate how intelligence is built, deployed, and governed. At the center of this shift sits retrieval augmented generation.

In enterprise environments, fluency without factual grounding creates risk. A system that sounds confident but produces incorrect or outdated information undermines trust faster than no automation at all. This is why retrieval augmented generation is no longer viewed as an enhancement to generative AI, but as a foundational architecture for deploying trusted AI at scale.

This blog examines why retrieval augmented generation has become essential for modern enterprise AI, how it enables reliable decision-making through knowledge grounding, and why organizations that ignore this pattern struggle to move beyond experimentation.

The Enterprise AI Reality Check

Early enterprise AI initiatives focused on speed and automation. Chatbots reduced ticket volumes. Models summarized documents. Internal copilots boosted productivity in isolated workflows. These gains were real, but limited.

As AI systems moved closer to core business processes, new risks surfaced. Models produced responses that were linguistically correct but factually wrong. Internal policies changed while AI answers did not. Compliance teams flagged hallucinations as unacceptable. Business users lost confidence.

The core issue was not model intelligence. It was the absence of grounding.

This is the environment in which retrieval augmented generation emerged as a corrective architecture rather than a feature. Enterprises realized that intelligence without access to authoritative data could not be trusted.

What Retrieval Augmented Generation Actually Solves

At a structural level, retrieval augmented generation solves a simple but critical problem. Generative models do not know what your organization knows. They know what they were trained on.

This creates a disconnect between enterprise reality and AI output. Retrieval augmented generation bridges that gap by allowing AI systems to fetch relevant, approved information at the moment a response is generated.

Instead of relying only on what the model absorbed during training, the system retrieves knowledge dynamically and uses it to shape the final answer. The model is still probabilistic, but its output is now conditioned on relevant, current context, which turns it from a free-form generator into a context-aware reasoning system.

For enterprises, this distinction defines whether AI is a liability or an asset.

How Retrieval Augmented Generation Works in Practice

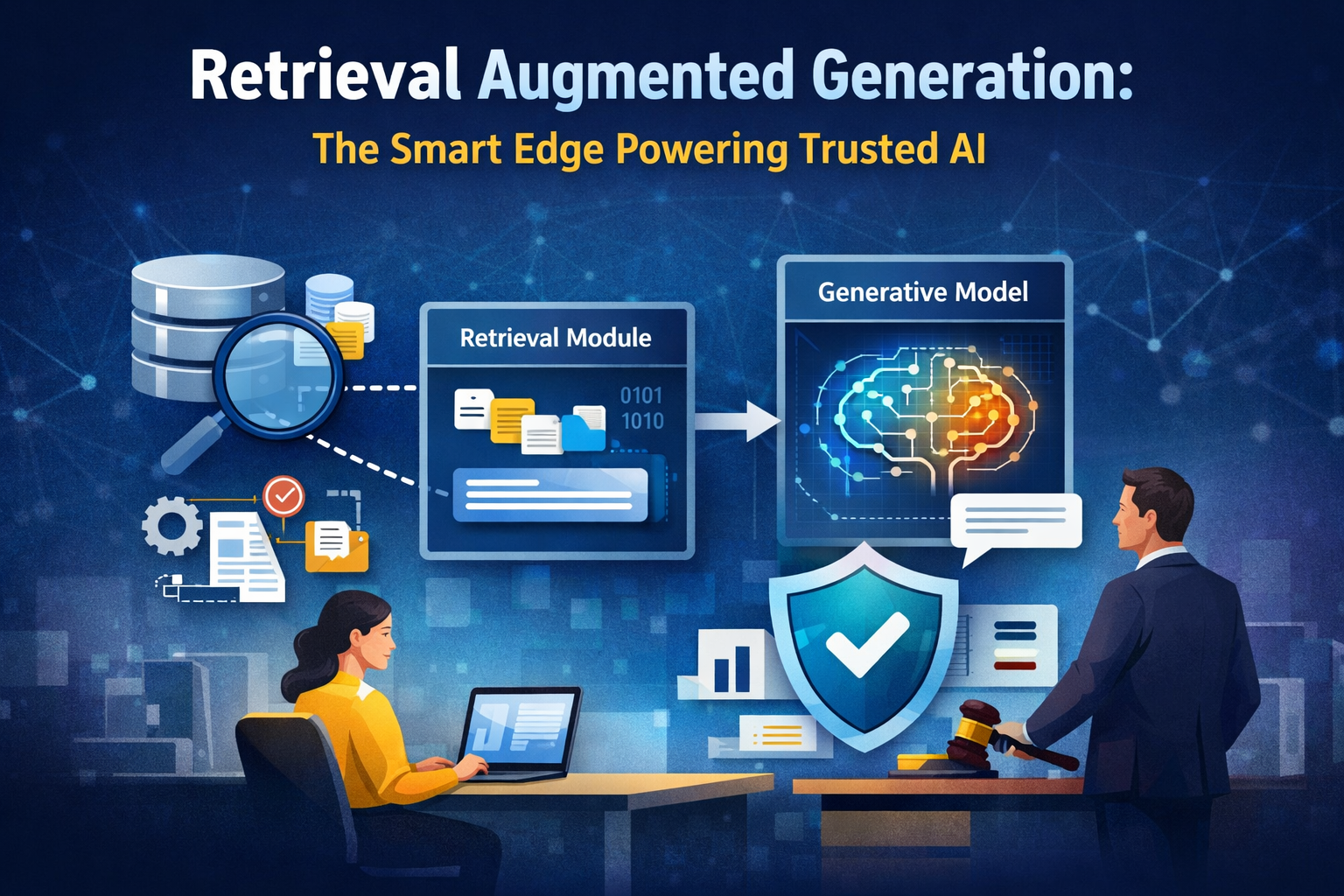

In a production-grade setup, retrieval augmented generation operates as a pipeline with clear responsibilities.

First, the user query is interpreted semantically rather than through keyword matching. The system understands intent, not just phrasing. Next, relevant information is retrieved from approved sources using vector search, hybrid retrieval, or structured queries. These sources may include internal documents, databases, APIs, or knowledge bases.
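To make the retrieval step concrete, here is a minimal sketch of hybrid retrieval in Python. It assumes a toy corpus with hypothetical document IDs and uses a term-frequency vector with cosine similarity as a stand-in for a learned embedding model, so its semantic signal is far weaker than a real one. The keyword and vector rankings are merged with reciprocal rank fusion (RRF), one common way to combine rankers; the constant `k = 60` is a conventional default, not a requirement.

```python
import math
import re
from collections import Counter

# Hypothetical approved sources; real systems index documents, databases, or APIs.
DOCS = {
    "refund-policy": "Refunds are issued within 30 days of purchase with a receipt.",
    "warranty": "The warranty covers manufacturing defects for two years.",
    "shipping": "Standard shipping takes five business days.",
}


def tokenize(text: str) -> list[str]:
    # Lowercase alphanumeric tokens; a production system would use a real tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_rank(query: str, docs: dict[str, str]) -> list[str]:
    # Keyword signal: how many distinct query terms appear in each document.
    terms = set(tokenize(query))
    scores = {doc_id: len(terms & set(tokenize(text))) for doc_id, text in docs.items()}
    return sorted(scores, key=scores.get, reverse=True)


def embed(text: str) -> Counter:
    # Stand-in for a learned embedding: a term-frequency vector (an assumption).
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def vector_rank(query: str, docs: dict[str, str]) -> list[str]:
    # Vector signal: cosine similarity between query and document vectors.
    query_vec = embed(query)
    scores = {doc_id: cosine(query_vec, embed(text)) for doc_id, text in docs.items()}
    return sorted(scores, key=scores.get, reverse=True)


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    # Each ranking adds 1 / (k + rank) to every document it lists.
    fused: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=fused.get, reverse=True)


def hybrid_search(query: str, docs: dict[str, str], top_k: int = 2) -> list[str]:
    # Fuse the keyword and vector rankings, then keep the top_k document IDs.
    return reciprocal_rank_fusion([keyword_rank(query, docs), vector_rank(query, docs)])[:top_k]
```

Calling `hybrid_search("How long does the warranty cover defects?", DOCS)` ranks `warranty` first. RRF still ranks documents that scored zero in both lists, so a real system would typically add a relevance cutoff as well as capping `top_k`.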

Finally, the retrieved context is injected into the prompt used by the generative model. The model generates a response constrained by that context.
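The injection step can be sketched as plain string assembly. The helper `build_grounded_prompt` below is hypothetical, as are the instruction wording and the character budget; real systems usually budget in tokens and then pass the prompt to whatever model API they use, which is omitted here.

```python
def build_grounded_prompt(
    question: str,
    chunks: list[dict[str, str]],
    max_context_chars: int = 2000,  # assumed budget; real systems usually count tokens
) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    lines: list[str] = []
    used = 0
    for chunk in chunks:  # chunks are assumed to arrive in relevance order
        line = f"[{chunk['id']}] {chunk['text']}"
        if used + len(line) > max_context_chars:
            break  # drop lower-ranked chunks rather than overflow the budget
        lines.append(line)
        used += len(line)
    context = "\n".join(lines)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical retrieved chunks, already ordered by relevance.
chunks = [
    {"id": "warranty", "text": "The warranty covers manufacturing defects for two years."},
    {"id": "shipping", "text": "Standard shipping takes five business days."},
]
prompt = build_grounded_prompt("How long is the warranty?", chunks)
```

Tagging each chunk with its source ID keeps the context traceable, and the explicit "say that you do not know" instruction gives the model a sanctioned alternative to guessing.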

The model handles language and reasoning. The retrieval layer supplies the facts.

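This separation lets teams update data sources, tune retrieval, and change models independently of one another, which is a large part of why retrieval augmented generation scales well in enterprise environments.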

Why Generative Models Alone Fail in Enterprise Settings

Generative models excel at producing coherent language. They do not excel at maintaining factual guarantees. This limitation is acceptable in consumer applications. In enterprise use cases, it introduces unacceptable risk.

A customer support system that hallucinates policy terms creates liability. A financial assistant that misstates rates damages trust. A compliance assistant that references outdated regulations creates exposure.

Retrieval augmented generation exists to solve this exact problem. By grounding responses in verified sources, it reduces hallucinations and aligns AI output with business reality.

Seen this way, many enterprise AI failures are not model failures. They are data access failures.

Knowledge Grounding as the Backbone of Trusted AI

Knowledge grounding is the defining advantage of retrieval augmented generation. It anchors each response in a known source of truth rather than in whatever the model happened to learn during training.

In enterprise systems, knowledge grounding means more than accuracy. It means governance. Organizations decide which data sources are authoritative, who can access them, and how they are updated.

This capability is essential for building trusted AI. Without grounding, AI systems remain opaque. With grounding, they become auditable and predictable.

Knowledge grounding also allows enterprises to update information without retraining models. Policies change. Prices change. Regulations change. Retrieval augmented generation reflects those changes as soon as the updated sources are re-indexed.
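A minimal sketch of updating knowledge without retraining, assuming a hypothetical in-memory `KnowledgeIndex` that scores by keyword overlap in place of a real vector database. The point it illustrates is that replacing a document changes what retrieval returns, while nothing about the model is touched.

```python
import re
from dataclasses import dataclass, field


def _terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class KnowledgeIndex:
    """Hypothetical in-memory index standing in for a vector database."""

    docs: dict[str, str] = field(default_factory=dict)

    def upsert(self, doc_id: str, text: str) -> None:
        # Replacing a document changes retrieval results; no model weights change.
        self.docs[doc_id] = text

    def search(self, query: str, top_k: int = 1) -> list[tuple[str, str]]:
        # Rank by keyword overlap for simplicity; real systems would use embeddings.
        query_terms = _terms(query)
        ranked = sorted(
            self.docs.items(),
            key=lambda item: len(query_terms & _terms(item[1])),
            reverse=True,
        )
        return ranked[:top_k]


index = KnowledgeIndex()
index.upsert("refund-policy", "Refunds are issued within 30 days of purchase.")
before = index.search("refund window in days")[0][1]

# The policy changes: update the source document, not the model.
index.upsert("refund-policy", "Refunds are issued within 14 days of purchase.")
after = index.search("refund window in days")[0][1]
```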

Retrieval Augmented Generation vs Fine-Tuning

Many organizations initially attempt to solve enterprise AI challenges through fine-tuning. While fine-tuning improves tone and behavior, it does not solve knowledge freshness or governance.

Fine-tuning embeds knowledge into the model at training time. Updating that knowledge requires retraining. This approach is slow, expensive, and brittle.

Retrieval augmented generation avoids this trap. Data lives outside the model. Knowledge updates propagate as soon as the underlying sources are re-indexed. Models remain stable while information evolves.

Fine-tuning teaches the model how to respond. Retrieval augmented generation teaches it what to respond with.

Enterprise AI Use Cases That Depend on Retrieval Augmented Generation

The more knowledge-intensive the use case, the more critical retrieval augmented generation becomes.

In customer support, AI systems retrieve product documentation, warranty terms, and escalation policies to answer complex questions accurately. In sales, assistants retrieve pricing rules, contract clauses, and historical deal data to support negotiations. In compliance, systems retrieve regulatory texts and internal guidelines to ensure responses align with current rules.

In each case, enterprise AI systems rely on retrieval augmented generation to operate safely and at scale.

Without retrieval, these systems either hallucinate or require constant human supervision.

Trusted AI Requires Architectural Trust, Not Just Model Trust

Trust in AI is not achieved through larger models or better prompts alone. It is achieved through architecture.

Trusted AI systems are designed so that incorrect behavior is difficult by default. Retrieval augmented generation supports this by conditioning the model’s output on verified information and instructing it to stay within that context.

This approach also supports transparency. Enterprises can trace responses back to source documents. This traceability is essential for audits, compliance reviews, and internal validation.
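One way to support this traceability is to store the IDs of the retrieved chunks with each answer and check that any citations in the answer point at something that was actually retrieved. In the sketch below, `answer_with_sources` and `fake_model` are hypothetical, the bracketed-ID citation convention is an assumption, and the real model call is replaced by a stub.

```python
import re
from typing import Callable


def answer_with_sources(
    question: str,
    chunks: list[dict[str, str]],
    generate: Callable[[str], str],
) -> dict:
    """Return the answer together with the evidence needed to audit it."""
    context = "\n".join(f"[{c['id']}] {c['text']}" for c in chunks)
    prompt = (
        "Answer using only the context and cite sources by id in square brackets.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
    answer = generate(prompt)
    retrieved = {c["id"] for c in chunks}
    cited = set(re.findall(r"\[([^\]]+)\]", answer))
    return {
        "question": question,
        "answer": answer,
        "retrieved": sorted(retrieved),
        "cited": sorted(cited),
        # Citations that match nothing retrieved should be flagged for review.
        "unsupported_citations": sorted(cited - retrieved),
    }


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call.
    return "Manufacturing defects are covered for two years [warranty]."


record = answer_with_sources(
    "How long is the warranty?",
    [{"id": "warranty", "text": "The warranty covers manufacturing defects for two years."}],
    fake_model,
)
```

Persisting records like this one, together with the versions of the source documents, gives reviewers a path from each answer back to its evidence.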

Trust emerges when systems behave predictably under pressure. Retrieval augmented generation enables that predictability.

Data Freshness and Operational Agility

One of the most underestimated advantages of retrieval augmented generation is operational agility.

Because information is retrieved at runtime, AI systems operate on the latest version of their indexed sources. This eliminates delays caused by retraining cycles and reduces operational overhead.

For enterprises operating in regulated or fast-changing environments, this capability is critical. AI systems can adapt as quickly as the data they rely on.

This agility is a key reason retrieval augmented generation has become central to modern enterprise AI platforms.

Security, Access Control, and Governance

Concerns about AI accessing sensitive data are valid. Retrieval augmented generation addresses these concerns by strengthening governance rather than weakening it.

Retrieval layers can enforce permissions. Sensitive data can be segmented. Access can be logged and audited. Responses can be traced to their sources.
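Permission enforcement in the retrieval layer might look like the sketch below. It assumes each chunk carries a set of allowed groups (a hypothetical schema) and filters on them before ranking, so restricted text never reaches the prompt. Each query is also appended to an in-memory audit log, which a real deployment would replace with durable logging.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical per-chunk access metadata


# In-memory stand-in for a durable, append-only audit log.
AUDIT_LOG: list[tuple[str, str, tuple[str, ...]]] = []


def _terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def secure_search(
    user: str, user_groups: set[str], query: str, chunks: list[Chunk], top_k: int = 3
) -> list[Chunk]:
    # Filter by permission before ranking so restricted text never reaches the prompt.
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    query_terms = _terms(query)
    matches = [c for c in visible if query_terms & _terms(c.text)]
    matches.sort(key=lambda c: len(query_terms & _terms(c.text)), reverse=True)
    results = matches[:top_k]
    # Record who asked what and which documents were returned.
    AUDIT_LOG.append((user, query, tuple(c.doc_id for c in results)))
    return results


CHUNKS = [
    Chunk("salary-bands", "Salary bands for each engineering level.", frozenset({"hr"})),
    Chunk("handbook", "Engineering onboarding handbook.", frozenset({"all"})),
]
```

Filtering before ranking, rather than after generation, means a permission mistake cannot be papered over by the model; the audit entry records the same decision.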

This level of control is difficult to achieve with purely generative systems. Retrieval augmented generation aligns AI behavior with existing security and governance frameworks.

For regulated industries, this alignment is non-negotiable.

Answer Engine Optimization and the Shift to Direct Answers

Search behavior is evolving. Users increasingly expect direct answers instead of lists of documents. This shift has implications both internally and externally.

Internally, employees want AI copilots that answer questions using organizational knowledge. Externally, customers expect precise responses without navigating documentation.

Retrieval augmented generation aligns perfectly with this expectation. It enables AI systems to function as answer engines rather than search interfaces.

From an answer engine optimization (AEO) standpoint, this architecture produces responses that are concise, contextual, and grounded. These are the qualities answer engines prioritize.

Common Implementation Mistakes to Avoid

Despite its benefits, retrieval augmented generation can fail if implemented poorly.

Low-quality data leads to low-quality answers. Weak retrieval relevance undermines trust. Poor prompt construction reduces clarity. Ignoring latency constraints degrades user experience.

Successful implementations treat retrieval quality as seriously as model performance. They invest in data curation, retrieval evaluation, and continuous monitoring.
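Retrieval evaluation can start with standard ranking metrics computed over a small labeled set of queries. The sketch below computes recall@k and mean reciprocal rank (MRR); the query IDs, document IDs, and relevance labels are hypothetical.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    # Fraction of the relevant documents that appear in the top k results.
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    # 1 / position of the first relevant result, or 0 if none was retrieved.
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(run: dict[str, list[str]], qrels: dict[str, set[str]], k: int = 5) -> dict[str, float]:
    # Average each metric over every labeled query; missing runs count as empty.
    n = len(qrels)
    recall = sum(recall_at_k(run.get(q, []), rel, k) for q, rel in qrels.items()) / n
    mrr = sum(reciprocal_rank(run.get(q, []), rel) for q, rel in qrels.items()) / n
    return {f"recall@{k}": recall, "mrr": mrr}


# Hypothetical labels (qrels) and retriever output (run).
qrels = {"q1": {"warranty"}, "q2": {"refund-policy", "returns"}}
run = {"q1": ["shipping", "warranty"], "q2": ["returns", "faq", "pricing"]}
print(evaluate(run, qrels, k=2))  # {'recall@2': 0.75, 'mrr': 0.75}
```

Tracking these numbers over time, and rerunning them whenever data or retrieval settings change, is one concrete form of the continuous monitoring described above.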

When done right, retrieval augmented generation becomes a durable competitive advantage rather than a fragile experiment.

The Role of Retrieval Augmented Generation in Agentic AI

As organizations move toward agentic AI systems that plan, reason, and act autonomously, retrieval augmented generation becomes even more critical.

Agents must continuously retrieve information, evaluate context, and make decisions. Without reliable retrieval, autonomy becomes unsafe.

Retrieval augmented generation provides the grounding layer that allows agents to operate within defined knowledge boundaries. This makes it a foundational component of next-generation enterprise AI architectures.
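A simple way to express a knowledge boundary is to let an agent act only when retrieval returns sufficiently similar evidence, and escalate otherwise. This sketch assumes term-frequency cosine similarity as a stand-in for embedding similarity, and the `0.2` threshold is chosen purely for illustration; real thresholds need tuning against evaluation data. `grounded_step` and `Decision` are hypothetical names.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Decision:
    action: str  # "answer" or "escalate"
    evidence: list[str] = field(default_factory=list)


def _vector(text: str) -> Counter:
    # Term-frequency vector as a stand-in for an embedding (an assumption).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def grounded_step(task: str, knowledge: dict[str, str], min_score: float = 0.2) -> Decision:
    """Let the agent proceed only when retrieval finds sufficiently similar evidence."""
    task_vec = _vector(task)
    scored = sorted(
        ((_cosine(task_vec, _vector(text)), doc_id) for doc_id, text in knowledge.items()),
        reverse=True,
    )
    evidence = [doc_id for score, doc_id in scored if score >= min_score]
    if not evidence:
        # Outside the knowledge boundary: hand off to a human instead of improvising.
        return Decision("escalate")
    return Decision("answer", evidence)


KNOWLEDGE = {
    "warranty": "The warranty covers manufacturing defects for two years.",
    "shipping": "Standard shipping takes five business days.",
}
```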

Why Retrieval Augmented Generation Is a Strategic Decision

Adopting retrieval augmented generation is not a technical optimization. It is a strategic choice about how intelligence operates within an organization.

It determines whether AI systems can be trusted. It defines how quickly knowledge updates propagate. It shapes governance and compliance posture.

Organizations that adopt this architecture early move faster with less risk. Those that delay often find themselves constrained by brittle systems and manual oversight.

Conclusion: The Smart Edge Powering Trusted AI

The future of enterprise AI belongs to systems that reason with facts, not just language. Retrieval augmented generation delivers that capability by grounding intelligence in real data.

By enabling knowledge grounding, supporting governance, and aligning AI outputs with organizational truth, retrieval augmented generation becomes the smart edge that powers trusted AI.

For enterprises serious about deploying AI in production, this architecture is no longer optional. It is foundational.