What Causes Latency in Voice AI? How to Overcome It

Voice AI is powerful only when it feels instant. Even a 500-millisecond delay can break customer experience. Here’s what causes latency and how to fix it.

TABLE OF CONTENTS

- Introduction

- What Is Latency in Voice AI?

- Why Latency Matters for Business Performance

- How Voice AI Processing Actually Works

- Best Practices to Reduce Latency

- Common Mistakes That Increase Latency

- How Gnani.ai Solves Latency Challenges

- Conclusion

- FAQ Section

- Related Articles

INTRODUCTION

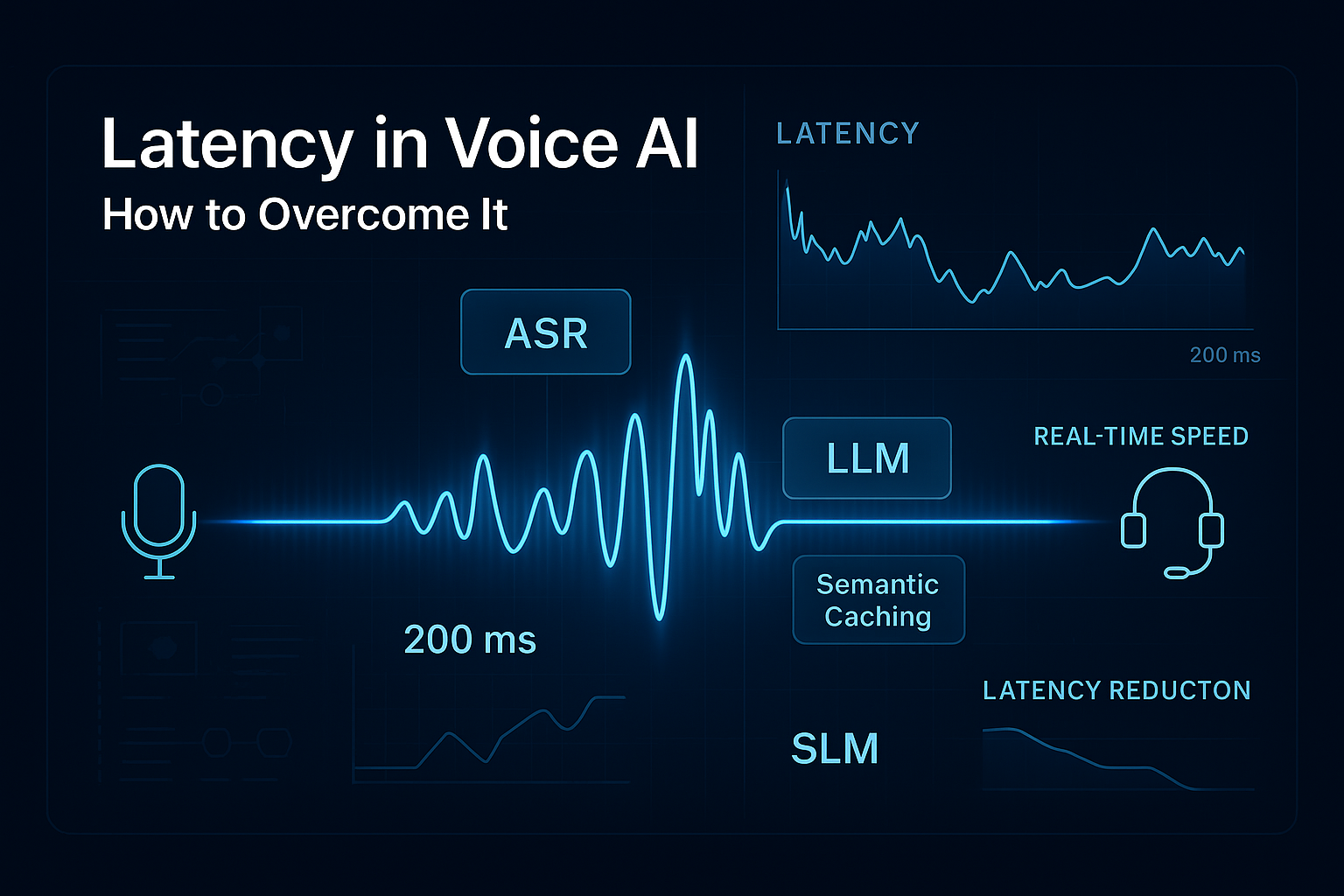

Latency in voice AI decides whether a conversation feels natural or robotic. When a customer speaks to a voice bot and waits one or two seconds for a reply, the entire interaction breaks. Studies show that humans perceive delays above 200 milliseconds as slow, and anything above 500 milliseconds disrupts conversational flow.

This article explains what causes latency in voice AI, why it matters for industries like banking, customer service, HR, and e-commerce, and the strategies enterprises can use to achieve real-time voice performance. The goal is to help decision-makers diagnose and reduce voice AI latency and deploy responsive, effective systems.

By the end, you’ll understand the technical pipeline, common mistakes, best practices, and an enterprise-ready approach to reducing conversational latency.

What Is Latency in Voice AI?

Latency is the total time a voice AI system takes to hear a user’s speech, understand it, decide what to say, and speak back. This includes:

- Audio capture

- ASR (speech-to-text)

- NLU/LLM processing

- Backend system calls

- TTS (text-to-speech) generation

- Network transfer across each stage

A real-time system should ideally respond within 250–500 milliseconds. Anything above 700–900 milliseconds feels slow.

Example

A simple timeline:

User Speech → ASR (200ms) → LLM (300ms) → TTS (200ms) → Final Response

Total: 700ms

This makes voice AI latency a measurable performance indicator that can be optimized stage by stage.
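The arithmetic in the timeline can be written as a small latency budget. This is a minimal sketch: the stage numbers and thresholds come from this article, while the function names and the "borderline" label for totals between 500 and 700 ms are illustrative assumptions.

```python
# Per-stage latencies in milliseconds, taken from the example timeline.
STAGE_LATENCY_MS = {"asr": 200, "llm": 300, "tts": 200}

# Thresholds used in this article: 250-500 ms is the target range,
# and totals above roughly 700 ms start to feel slow.
TARGET_MAX_MS = 500
SLOW_ABOVE_MS = 700


def total_latency_ms(stages: dict[str, int]) -> int:
    """Sum the stage latencies of a strictly sequential pipeline."""
    return sum(stages.values())


def classify(total_ms: int) -> str:
    """Label a total against the thresholds above (labels are illustrative)."""
    if total_ms <= TARGET_MAX_MS:
        return "real-time"
    if total_ms <= SLOW_ABOVE_MS:
        return "borderline"
    return "slow"


def largest_stage(stages: dict[str, int]) -> str:
    """Return the stage that contributes the most latency."""
    return max(stages, key=stages.get)


total = total_latency_ms(STAGE_LATENCY_MS)
print(total, classify(total), largest_stage(STAGE_LATENCY_MS))
```

Tracking the largest stage alongside the total shows where optimization effort is likely to pay off first; in this example it is the LLM step.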

Why Latency Matters for Business Performance

Latency directly impacts customer satisfaction, conversion rates, and operational outcomes.

1. Banking & Financial Services

Delays during fraud verification, loan servicing, or KYC verification lead to customer anxiety and poor containment rates.

J.D. Power reports that 68 percent of customers drop calls when automated systems feel slow.

2. E-commerce & Customer Support

A one-second delay can reduce customer satisfaction by up to 16 percent (Forrester).

Faster voice bot speed leads to:

- Higher first-call resolution

- Fewer abandoned calls

- Faster issue resolution

3. HR & Recruitment Automation

Latency slows screening calls, onboarding interactions, and knowledge queries. Enterprises see:

- 20–30 percent longer processing times

- Poorer candidate engagement

- Reduced automation efficiency

4. Competitive Differentiation

Real-time voice gives companies a strategic advantage:

- Faster workflows

- Smoother customer experiences

- Higher automation accuracy

- Reduced operational load

Low latency = higher ROI across enterprise functions.

How Voice AI Processing Actually Works

Latency comes from each computational block inside the voice AI pipeline. Here’s the breakdown.

Step-by-Step Flow

1. Audio Capture

Microphone input is streamed in real time. Latency here depends on:

- Codec compression

- Signal processing

- Noise suppression

2. ASR (Automatic Speech Recognition)

This converts speech to text. ASR latency depends on:

- Model size

- Acoustic model quality

- Language complexity

- GPU/TPU availability

3. NLU / LLM Processing

This interprets user intent.

Latency depends on:

- Model size (LLM vs SLM)

- Context window

- Prompt length

- Real-time inference hardware

4. Backend API Calls

If the bot needs to fetch account data, perform KYC checks, or trigger workflows, API delays add 150–300ms on average.

Semantic Caching

A major latency advantage comes from semantic caching. When the system has already answered a similar question - even if phrased differently - it can match the new query to the earlier one by meaning and reuse the previous response.

Examples:

- “What’s my EMI date?”

- “When is my installment due?”

- “When do I need to pay this month?”

All three map to the same semantic meaning.

Once the speech has been transcribed, the system retrieves the prior answer instead of running the full LLM → API call path again, which can cut the response step to roughly 100–200 milliseconds.

This also reduces backend traffic and improves scalability.

5. TTS (Text-to-Speech)

Generates natural speech. Neural TTS models typically add 100–200ms.

6. Network & Routing

Cloud architecture, region selection, and routing can add anywhere from 40 to 300ms.
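To see which of these stages dominates in a given deployment, each one can be timed separately. The sketch below assumes a hypothetical `LatencyTrace` helper and uses `time.sleep` calls as placeholders for real ASR, LLM, and TTS work.

```python
import time
from contextlib import contextmanager


class LatencyTrace:
    """Accumulates wall-clock time per named pipeline stage, in milliseconds."""

    def __init__(self):
        self.stages_ms: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.stages_ms[name] = self.stages_ms.get(name, 0.0) + elapsed_ms

    def total_ms(self) -> float:
        return sum(self.stages_ms.values())

    def slowest(self) -> str:
        return max(self.stages_ms, key=self.stages_ms.get)


trace = LatencyTrace()
with trace.stage("asr"):
    time.sleep(0.02)   # placeholder for speech recognition
with trace.stage("llm"):
    time.sleep(0.08)   # placeholder for intent / response generation
with trace.stage("tts"):
    time.sleep(0.01)   # placeholder for speech synthesis

print({name: round(ms) for name, ms in trace.stages_ms.items()})
print("slowest stage:", trace.slowest())
```

Recording stages per call, rather than only the end-to-end number, makes it easier to tell whether a slow response came from the model, the backend, or the network.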

Best Practices to Reduce Latency

Here are proven ways to implement low-latency voice systems.

1. Use Streaming ASR and TTS

Instead of waiting for complete sentences:

- Stream partial transcripts

- Start generating audio before full response

This can reduce conversational latency by 40–60 percent.
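The effect of streaming on time-to-first-audio can be illustrated with chained generators and a simulated clock. Everything here is an assumption made for illustration: the per-token and per-word costs, the sample reply, and the function names. No real model is called.

```python
from typing import Iterator


class SimClock:
    """Simulated clock so the example is deterministic (no real waiting)."""

    def __init__(self):
        self.now_ms = 0.0

    def advance(self, ms: float) -> None:
        self.now_ms += ms


def llm_tokens(text: str, clock: SimClock, ms_per_token: float = 10) -> Iterator[str]:
    """Yield words one at a time, charging an assumed generation cost per token."""
    for token in text.split():
        clock.advance(ms_per_token)
        yield token


def clauses(tokens: Iterator[str]) -> Iterator[str]:
    """Group tokens into clauses, emitting as soon as punctuation is seen."""
    buffer: list[str] = []
    for token in tokens:
        buffer.append(token)
        if token[-1] in ".,?!":
            yield " ".join(buffer)
            buffer = []
    if buffer:
        yield " ".join(buffer)


def tts(chunks: Iterator[str], clock: SimClock, ms_per_word: float = 5) -> Iterator[str]:
    """Yield one audio placeholder per chunk, charging an assumed cost per word."""
    for chunk in chunks:
        clock.advance(ms_per_word * len(chunk.split()))
        yield f"<audio:{chunk}>"


def first_audio_streaming(text: str) -> float:
    """Time until the first clause has been synthesized."""
    clock = SimClock()
    next(tts(clauses(llm_tokens(text, clock)), clock))
    return clock.now_ms


def first_audio_batch(text: str) -> float:
    """Time until audio exists when the full reply is generated first."""
    clock = SimClock()
    full_reply = " ".join(llm_tokens(text, clock))
    next(tts(iter([full_reply]), clock))
    return clock.now_ms


REPLY = "Your EMI is due on the fifth, and the amount is unchanged."
print(first_audio_batch(REPLY), first_audio_streaming(REPLY))  # 180.0 105.0
```

Because generators are lazy, the streaming path only generates tokens up to the first clause boundary before synthesis starts; the rest of the reply is produced while the first audio is already playing.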

2. Deploy Models Closer to Users (Edge/Regional Hosting)

Regional GPU hosting cuts network delays by 100–200ms.
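One simple way to choose a hosting region is to compare measured round-trip times from where users actually are. This is a sketch under assumptions: the region names and sample values are made up, and the measurements would come from real probes in practice.

```python
from statistics import median


def pick_region(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the region with the lowest median round-trip time.

    The median is used so that a single slow probe does not skew the choice.
    """
    return min(rtt_samples_ms, key=lambda region: median(rtt_samples_ms[region]))


# Hypothetical probe results (milliseconds) from one user location.
SAMPLES = {
    "region-a": [180, 210, 195],
    "region-b": [35, 40, 120],   # one outlier, still the closest overall
    "region-c": [90, 85, 95],
}

print(pick_region(SAMPLES))  # region-b
```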

3. Use Smaller, Fine-Tuned Models

Replacing large general-purpose LLMs with small domain-specific models can deliver:

- 2–3x faster responses

- Comparable accuracy on in-domain business tasks

- Reduced GPU load

4. Minimize Backend Hops

Consolidate:

- Databases

- Identity checks

- External APIs

Fewer hops = fewer milliseconds wasted.

5. Use Low-Latency Audio Codecs

Prefer codecs like Opus or PCM for real-time voice.
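With any codec, audio is sent in frames, and every frame that is buffered before sending adds its duration to the delay. The sketch below works through that arithmetic for raw PCM, assuming 16-bit mono audio and 20 ms frames; these values are common choices, not settings taken from this article.

```python
def pcm_frame_bytes(sample_rate_hz: int, frame_ms: int,
                    bytes_per_sample: int = 2, channels: int = 1) -> int:
    """Size in bytes of one uncompressed PCM frame."""
    samples = sample_rate_hz * frame_ms // 1000
    return samples * bytes_per_sample * channels


def buffering_delay_ms(frame_ms: int, frames_buffered: int) -> int:
    """Delay added by holding back this many frames before sending."""
    return frame_ms * frames_buffered


print(pcm_frame_bytes(16000, 20))   # 640 bytes per 20 ms frame at 16 kHz
print(pcm_frame_bytes(8000, 20))    # 320 bytes per 20 ms frame at 8 kHz
print(buffering_delay_ms(20, 3))    # 60 ms added by a 3-frame buffer
```

Smaller frames and shallower buffers lower delay but leave less slack for network jitter, so the right setting depends on the connection quality.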

6. Use Small Language Models (SLMs) for Domain Tasks

SLMs reduce latency because they have far fewer parameters than general-purpose LLMs, so each generated token requires less computation.

Because they are trained or fine-tuned on a narrow, industry-specific dataset, they can stay accurate on domain tasks without carrying the capacity needed for open-ended general knowledge.

This leads to:

- Faster inference time (less computation per token)

- Shorter context windows

- More predictable responses

- Reduced GPU load

Because the model already “knows” the domain (banking, insurance, e-commerce), it needs less in-prompt instruction and context to produce a relevant answer.

This alone can reduce response time by 30–50 percent in real-world deployments.

Common Mistakes That Increase Latency

1. Using Overly Large LLMs

Oversized LLMs respond slowly and increase operational costs.

2. Running Models in Non-Optimal Regions

Hosting voice services far from user locations adds unnecessary delay.

3. Sequential Instead of Parallel Processing

Waiting for each stage (ASR → NLU → TTS) to finish completely before the next one starts, rather than streaming partial results between stages, increases overall delay.

4. Too Many Backend API Calls

Creating multiple synchronous calls adds cumulative latency.
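When backend calls do not depend on each other, issuing them concurrently means the wait is set by the slowest call rather than the sum of all calls. The sketch below simulates this with `asyncio`; the call names and the 50 ms delays are hypothetical placeholders for real backend requests.

```python
import asyncio
import time

# Hypothetical independent backend calls and their simulated durations (seconds).
CALLS = {"account": 0.05, "kyc": 0.05, "workflow": 0.05}


async def fetch(name: str, delay_s: float) -> str:
    """Stand-in for a backend request; sleeps instead of doing network I/O."""
    await asyncio.sleep(delay_s)
    return name


async def sequential() -> list[str]:
    """Await each call before starting the next one."""
    return [await fetch(name, delay) for name, delay in CALLS.items()]


async def concurrent() -> list[str]:
    """Start all calls at once and wait for them together."""
    return await asyncio.gather(*(fetch(name, delay) for name, delay in CALLS.items()))


def timed(coro_fn):
    """Run a coroutine function and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn())
    return result, (time.perf_counter() - start) * 1000


seq_result, seq_ms = timed(sequential)
con_result, con_ms = timed(concurrent)
print(f"sequential: {seq_ms:.0f} ms, concurrent: {con_ms:.0f} ms")
```

This only helps for independent calls. If one call needs the result of another, consolidating them behind a single backend endpoint is the more relevant fix.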

5. No Caching Layer

Not caching FAQs or static responses can add 200ms per request.
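For FAQs and other static responses, even a simple exact-match cache with an expiry time avoids repeating the same work. This is a minimal sketch; the `TTLCache` class, the key normalization, and the 60-second lifetime are illustrative assumptions.

```python
import time
from typing import Callable


class TTLCache:
    """Exact-match cache whose entries expire after ttl_s seconds."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._store: dict[str, tuple[float, str]] = {}

    def get_or_compute(self, question: str, compute: Callable[[], str]) -> str:
        key = question.strip().lower()   # normalize trivial differences
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]              # fresh hit: skip the slow path
        value = compute()                # miss or expired: do the slow work once
        self._store[key] = (now + self.ttl_s, value)
        return value


faq_cache = TTLCache(ttl_s=60)
answer = faq_cache.get_or_compute("Branch hours?", lambda: "static answer")
print(answer)
```

Unlike a semantic cache, this only matches questions whose normalized text is identical, which is usually enough for menu-style prompts and fixed FAQ entries.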

Each mistake compounds into major conversational latency.

How Gnani.ai Solves Latency Challenges

Gnani.ai uses a real-time, low-latency voice architecture optimized for enterprise-grade workloads. Here's how it reduces latency at each stage.

1. Streaming ASR and TTS Pipelines

Gnani.ai's streaming ASR processes audio while the user is still speaking, enabling sub-300ms partial responses.

2. Domain-Specific Small Language Models (SLMs)

SLMs trained for BFSI, e-commerce, and HR deliver:

- Faster inference

- Higher accuracy

- Lower GPU cost

3. Multilingual, Region-Hosted GPU Clusters

Deployable across India, APAC, Middle East, and US regions for real-time performance.

4. Autonomous Agentic Architecture

Reduces backend calls by embedding business logic in the agent.

5. Human-Like Voice with Low-Millisecond TTS

Optimized neural TTS delivers natural voice with minimal delay.

Related resources:

- Learn more about the platform at Gnani.ai

- Explore advanced agent capabilities on Inya.ai

- See marketing and automation use cases at Marketing Automation AI

6. Domain SLMs with Built-In Semantic Memory

Gnani.ai’s SLM architecture is designed for low-latency enterprise workloads.

Because each SLM is pre-trained on industry-specific patterns, it can be much smaller than a general-purpose model - delivering faster intent recognition and response generation.

Combined with semantic caching, Gnani.ai agents can recognize repeated or paraphrased queries and respond instantly without reprocessing every layer.

This provides real-time voice performance, improves throughput, and reduces API dependency.

CONCLUSION

Latency defines the quality of any voice AI interaction. When response times exceed user expectations, trust drops and customer experience suffers. By understanding the causes of latency, optimizing each pipeline stage, and adopting best practices such as streaming, regional hosting, and domain-specific models, enterprises can build real-time voice experiences that feel natural and human-like.

For organizations looking to accelerate automation, real-time voice AI is no longer optional - it’s a competitive advantage.

FAQ SECTION

1. What is latency in voice AI?

Latency in voice AI is the time a system takes to listen, process, and respond. It determines how quickly the bot replies, and lower latency makes conversations feel natural.

2. What is considered acceptable latency for voice bots?

The ideal response time for a voice bot is 250–500 milliseconds. Anything above roughly 700–900 milliseconds starts to feel slow and robotic.

3. What causes high latency in voice AI?

The main causes include heavy neural models, poor network routing, backend API delays, slow ASR, and sequential processing. Optimizing each layer reduces conversational latency.

4. How do I reduce latency in enterprise voice AI?

Use streaming ASR/TTS, deploy models in regional zones, use domain SLMs, reduce backend calls, and optimize audio codecs.

5. Does using a large LLM increase latency?

Yes. Large models significantly slow response time. Smaller domain models improve performance while maintaining accuracy.

6. How does low latency impact business performance?

Low latency improves customer satisfaction, reduces call abandonment, increases automation success, and boosts ROI.

7. Is latency more critical for banking and finance?

Yes. Banking interactions often involve security checks, KYC, or account queries where delays cause frustration.